As artificial intelligence (AI)-powered chatbots become increasingly common in healthcare settings, questions about their effectiveness and reliability continue to spark debate.

The World Health Organization (WHO) has introduced an AI health assistant, but a recent report says it is not always accurate. Experts say health chatbots could have a major impact on healthcare businesses, but their varying levels of accuracy raise serious questions about whether they will aid or undermine patient care.

“Medical chatbots, like other AI-powered tools, are more likely to provide highly accurate answers when they are trained thoroughly on high-quality, diverse datasets and when user prompts are clear and simple,” Julie McGuire, managing director of the BDO Center for Healthcare Excellence & Innovation, told PYMNTS. “However, when questions are more complex or unusual, medical chatbots may provide insufficient or inaccurate answers, and may even fabricate research to justify them.”

The rise of chatbot medics

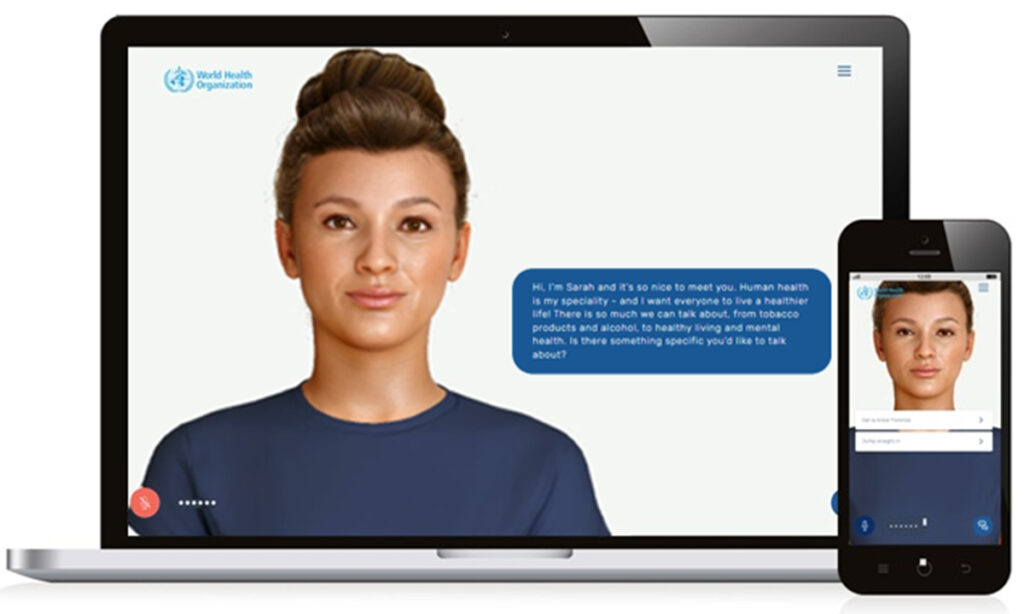

WHO's new tool, Smart AI Resource Assistant for Health (Sarah), has faced problems since its launch. The AI-powered chatbot provides health-related advice in eight languages, covering topics such as healthy eating, mental health, cancer, heart disease, and diabetes. Developed by New Zealand company Soul Machines, Sarah also incorporates facial recognition technology to provide more empathetic responses.

Although WHO says the AI bot is updated with the latest information from the organization and its trusted partners, Bloomberg recently reported that it does not include the latest U.S.-based medical advisories or news events.

The World Health Organization did not immediately respond to PYMNTS' request for comment.

AI chatbots for health are becoming increasingly popular. For example, Babylon Health's chatbot can assess symptoms, provide medical advice, and guide patients on whether to consult a doctor. Sensely's chatbot with avatars helps users access health insurance benefits and connects them directly to medical services. Ada Health aims to assist users by diagnosing conditions based on symptoms.

AI is useful for medical chatbots because it can analyze large amounts of data and quickly provide more personalized responses to patient inquiries, Tim Lawless, global health leader at digital consulting firm Publicis Sapient, told PYMNTS. The strength and specificity of responses from AI-powered chatbots like ChatGPT increase with the amount of data fed into them. It is therefore important to integrate patient data effectively into production systems, which could open the door to more powerful uses as the technology evolves, he said.

However, Lawless said the accuracy of medical chatbots varies and often depends on the amount and quality of data used for training. Responses from conversational AI tools like ChatGPT can be generic and less accurate if not provided with enough specific data.

“Although AI can quickly process and analyze large amounts of data, the interpretation and application of this data still require human oversight to ensure the data is accurate,” he added. “This is especially true in the medical field, where the stakes are high and situations are often complex. The accuracy of medical chatbots also depends on their ability to understand and respond to the nuances of human language and emotion.”

The future of medicine?

Talk to a bot and you may never need to go to the doctor. McGuire said chatbots will allow healthcare providers to offer unprecedented access to customized medical advice. Detailed, chatbot-driven inquiries can also help healthcare providers connect patients with the specific medical services they need. She noted that chatbots could reduce the amount of time clinicians spend communicating with patients and alleviate some of the workload that currently causes clinician burnout.

“But chatbots are not a 'set it and forget it' solution,” McGuire said. “Healthcare providers should consider the liability that arises from the use of AI-powered medical chatbots. To maintain standards of accuracy and timeliness, healthcare providers must assign a dedicated clinician to review the responses. A trained medical chatbot is not yet a trained clinician.”

In some cases, observers say, chatbots are easier to talk to than humans. Lawless said chatbots can quickly simplify medical information and treatment plans, make things clearer for patients, and serve a broader population. Doctors often provide detailed explanations and support when patients are not in the best position to absorb information, such as immediately after a procedure. He said patients are usually ready to begin treatment after a few days. At that point, when a patient has questions or is ready to process information, a medical chatbot can provide the support they need around the clock.

“However, it is important to remember that while medical chatbots can provide valuable assistance, they are not a substitute for professional medical advice,” he added. “The integration of AI in healthcare also raises important concerns about data privacy and security, which must be addressed when deploying these tools.”

AI is not only changing the way patients interact with bots, but also the way doctors do their jobs. Ryan Gross, head of data and applications at Caylent, told PYMNTS that AI tools like AWS HealthScribe can recognize speaker roles, categorize interactions, and identify medical terminology to create preliminary clinical documentation. This technology streamlines the data collection and documentation process, allowing healthcare professionals to focus on patient care.

According to Gross, generative AI agents built on Amazon Bedrock assist healthcare professionals by handling complex tasks, managing different types of data, and using customized language models. These agents can retrieve information from other medical datasets and tools, as well as external sources, and provide answers with scores for accuracy and contextual relevance.

“For example, a clinician can use the agent to determine a course of action for a patient with end-stage COPD,” Gross said. “Agents can access patient EHR records, imaging data, genetic data, and other relevant information to generate detailed responses. Agents can also use indexes built on Amazon Kendra to identify clinical trials and search the pharmaceutical and biomedical literature to provide clinicians with the most accurate and relevant information to make informed decisions.”