Just two months after launching Gemini, the large language model that Google hopes will take it to the top of the AI industry, the company is already announcing its successor. Google today released Gemini 1.5, making it available to developers and enterprise users ahead of a full public rollout coming soon. The company has made it clear that it is fully committed to Gemini as a business tool, a personal assistant, and everything in between, and it is pushing hard on that plan.

Gemini 1.5 has many improvements. Gemini 1.5 Pro, the general-purpose model in Google's system, is apparently on par with Gemini Ultra, the high-end model the company only recently launched, and it outperforms Gemini 1.0 Pro on 87 percent of benchmark tests. It was built using an increasingly popular technique known as “Mixture of Experts” (MoE). This means that instead of processing the entire model every time you submit a query, it runs only part of the model. (Here's a nice explanation on the subject.) This approach should make the model faster for you to use and more efficient for Google to run.
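To make the “only runs part of the model” idea concrete, here is a minimal sketch of generic top-k Mixture-of-Experts routing. Google has not published how Gemini 1.5's router works, so this is not its implementation: the class and function names (`ToyMoELayer`, `make_expert`) are hypothetical, and each “expert” is a toy scaling function standing in for a real neural network layer. The point it shows is that a cheap router scores every expert, but only the top `top_k` experts actually execute for a given input.

```python
import math
import random


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def make_expert(scale):
    """Hypothetical toy 'expert' that just scales its input vector.
    In a real MoE model, each expert is a feed-forward network layer."""
    return lambda vec: [scale * v for v in vec]


class ToyMoELayer:
    """Minimal top-k Mixture-of-Experts layer (illustrative only)."""

    def __init__(self, num_experts, dim, top_k=2, seed=0):
        rng = random.Random(seed)
        # Expert i multiplies its input by (i + 1).
        self.experts = [make_expert(i + 1) for i in range(num_experts)]
        # Router (gating) weights: one row of weights per expert.
        self.gate = [[rng.uniform(-1.0, 1.0) for _ in range(dim)]
                     for _ in range(num_experts)]
        self.top_k = top_k
        # Counts how many times each expert actually ran.
        self.calls = [0] * num_experts

    def forward(self, x):
        # 1. The router scores every expert for this input (cheap dot products).
        scores = [sum(w * xi for w, xi in zip(row, x)) for row in self.gate]
        # 2. Keep only the top_k highest-scoring experts.
        chosen = sorted(range(len(scores)), key=lambda i: scores[i],
                        reverse=True)[: self.top_k]
        # 3. Turn the chosen experts' scores into mixing weights that sum to 1.
        weights = softmax([scores[i] for i in chosen])
        # 4. Run ONLY the chosen experts and blend their outputs.
        out = [0.0] * len(x)
        for w, i in zip(weights, chosen):
            self.calls[i] += 1
            y = self.experts[i](x)
            out = [o + w * yi for o, yi in zip(out, y)]
        return out, chosen


layer = ToyMoELayer(num_experts=8, dim=4, top_k=2)
output, used = layer.forward([0.5, -1.0, 2.0, 0.25])
print("experts used:", used)         # 2 of the 8 experts
print("expert calls:", layer.calls)  # the other 6 experts never ran
```

In this sketch, the saving comes from step 4: with 8 experts and `top_k=2`, three quarters of the expert computation is skipped for each input. Production systems route each token separately and typically add tricks such as load balancing across experts, which this toy version leaves out.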

But there's one new thing about Gemini 1.5 that has the whole company, starting with CEO Sundar Pichai, particularly excited. Gemini 1.5 has a huge context window, which means it can handle much larger queries and look at much more information at once. That window is a whopping 1 million tokens, compared to 128,000 tokens for OpenAI's GPT-4 and 32,000 tokens for the current Gemini Pro. Tokens are a difficult metric to understand (here's a detailed breakdown), so Pichai puts it more simply: “It's about 10 or 11 hours of video, tens of thousands of lines of code.” The larger context window means you can ask the AI bot about all of that content at once.

(Pichai also said that Google researchers are testing a 10 million-token context window, which is like taking in the entire *Game of Thrones* series all at once.)

While explaining this to me, Pichai pointed out offhandedly that you could fit the entire *Lord of the Rings* trilogy into that context window. This seemed too specific, so I asked him: this has already happened, right? Someone at Google is checking whether Gemini spots any continuity errors, trying to untangle the complicated genealogy of Middle-earth, and wondering whether AI can finally make sense of Tom Bombadil. “I'm sure it has happened,” Pichai says with a laugh. “Or it's going to happen, one of the two.”

Pichai also believes the expanded context window will be very useful for businesses. “This enables use cases where you can add a lot of personal context and information at the moment of the query,” he says. “Think of it as a dramatically expanded query window.” He imagines filmmakers uploading an entire film and asking Gemini what critics might say about it. He sees companies using Gemini to examine large volumes of financial records. “I think this is one of the bigger breakthroughs we've made,” he says.

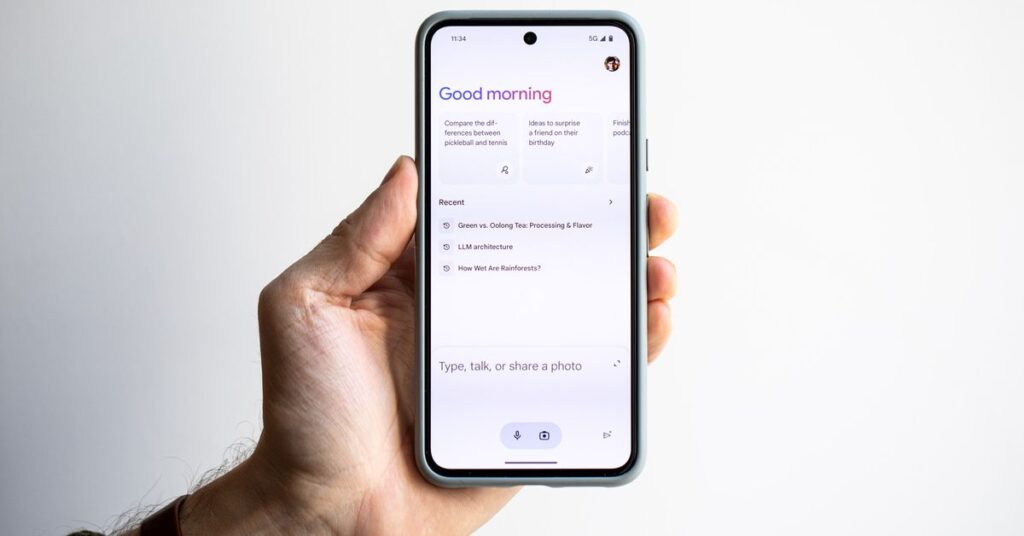

For now, Gemini 1.5 is available only to business users and developers through Google's Vertex AI and AI Studio. Eventually it will replace Gemini 1.0, and the standard version of Gemini Pro (the version available to everyone on gemini.google.com and in the company's apps) will be 1.5 Pro with a 128,000-token context window. You will need to pay extra to get the full 1 million tokens. Google is also testing the model's safety and ethical boundaries, especially with regard to the new, larger context window.

Google is in a fierce race to build the best AI tools right now, as businesses around the world try to settle on their own AI strategies and decide whether to sign developer agreements with OpenAI, Google, or someone else. Just this week, OpenAI announced “memory” for ChatGPT and appears to be preparing a push into web search. So far, Gemini looks impressive, especially for those already in Google's ecosystem, but there's a lot of work left to do on all sides.

Ultimately, Pichai tells me, all these 1.0s and 1.5s, Pros and Ultras, and corporate battles will matter less to users. “People will just consume the experience,” he says. “It's like using a smartphone without constantly paying attention to the processor underneath it.” But for now, he says, we're still at the stage where everyone knows which chip is inside their phone, because it matters. “The underlying technology is changing very rapidly,” he says. “People care.”