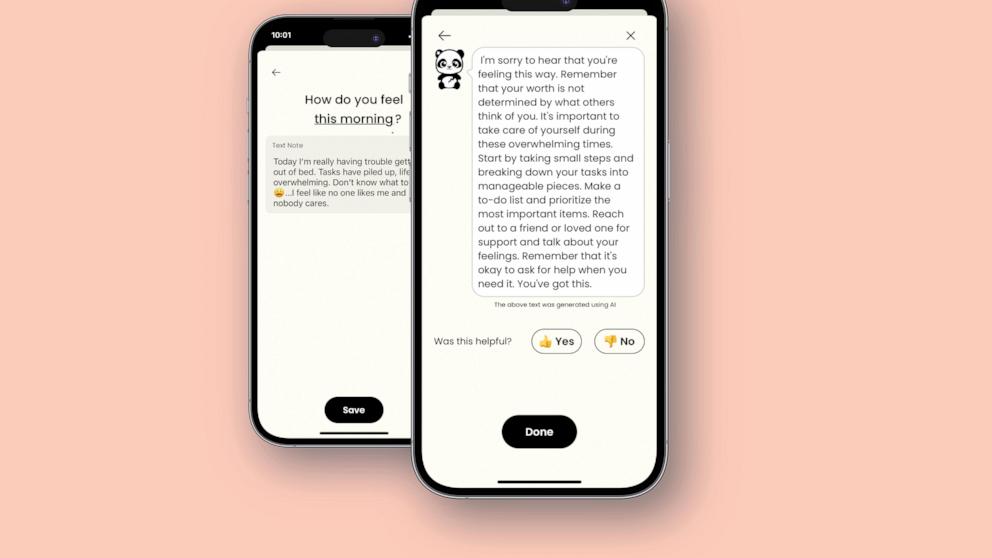

WASHINGTON — When you download mental health chatbot Earkick, you'll be greeted by a bandanna-wearing panda that would fit right in a children's cartoon.

When you start talking or typing about your anxiety, the app generates the kind of comforting, sympathetic statements that therapists are trained to provide. The panda may then suggest a guided breathing exercise, ways to reframe negative thoughts, or stress management tips.

While this is all part of an established approach used by therapists, don't call it therapy, says Earkick co-founder Karin Andrea Stephan.

“It's fine for people to call us a form of therapy, but we don't really promote it,” says Stephan, a former professional musician and self-described serial entrepreneur. “We don't like it.”

The question of whether these artificial intelligence-based chatbots are providing mental health services or simply a new form of self-help is critical to the emerging digital health industry and its survival.

Earkick is one of hundreds of free apps being pitched as a way to address the mental health crisis among teens and young adults. Because these apps do not explicitly claim to diagnose or treat any medical condition, they are not regulated by the Food and Drug Administration. This hands-off approach has come under new scrutiny with the startling advances in chatbots powered by generative AI, a technology that uses vast amounts of data to mimic human language.

The industry's argument is simple. Chatbots are free, available 24/7, and don't come with the stigma that drives some people away from treatment.

However, there is limited data that they actually improve mental health. And while no major companies have gone through the FDA approval process to show that they effectively treat conditions such as depression, a few have voluntarily begun the process.

“Consumers have no way of knowing whether they are actually effective because there is no regulatory body overseeing them,” says Vaile Wright, a psychologist and technology director at the American Psychological Association.

Although chatbots are not equivalent to traditional therapy, Wright believes they can be helpful for mild mental and emotional issues.

Earkick's website states that the app “does not provide any form of medical care, medical opinion, diagnosis, or treatment.”

Some health lawyers argue that such disclaimers are not enough.

“If you're really concerned about people using your app for mental health services, you need a more direct disclaimer: It's for fun,” said Glenn Cohen of Harvard Law School.

Still, as the shortage of mental health professionals continues, chatbots are already playing a role.

The UK's National Health Service has launched a chatbot called Wysa to help adults and teenagers with stress, anxiety and depression, including those waiting to see a therapist. Some U.S. insurance companies, universities, and hospital chains offer similar programs.

Dr. Angela Skrzynski, a family physician in New Jersey, said patients are typically very open to trying a chatbot after she explains that the waiting list to see a therapist is months long.

Skrzynski's employer, Virtua Health, realized that it would be impossible to hire and train enough therapists to meet demand, so it started offering a password-protected app, Woebot, to select adult patients.

“This is beneficial not only for patients, but also for clinicians who are scrambling to give something to those who are suffering,” Skrzynski said.

Virtua's data shows that patients tend to use Woebot for about seven minutes per day, typically between 3 a.m. and 5 a.m.

Founded in 2017 by a Stanford University-trained psychologist, Woebot is one of the oldest companies in the space.

Unlike Earkick and many other chatbots, Woebot's current app does not use so-called large language models, the generative AI that allows programs like ChatGPT to quickly generate original text and conversations. Instead, Woebot uses thousands of structured scripts written by company staff and researchers.

Founder Alison Darcy said this rules-based approach is safer for health care use, given generative AI chatbots' tendency to “hallucinate,” or fabricate information. Woebot has been testing generative AI models, but Darcy said the technology has had issues.

“We couldn't stop large language models from simply intervening and telling people how they should think, rather than facilitating their processes,” Darcy said.

Woebot offers apps for adolescents, adults, people with substance use disorders, and women suffering from postpartum depression. None has been approved by the FDA, although the company did submit its postpartum app for the agency's review. The company said it has “paused” that effort to focus on other areas.

Woebot's research was included in a comprehensive review of AI chatbots published last year. Of the thousands of papers reviewed by the authors, only 15 met the gold standard of medical research: rigorously controlled trials in which patients were randomly assigned to receive chatbot therapy or a comparative treatment.

The authors concluded that chatbots can “significantly reduce” symptoms of depression and distress in the short term. However, most of the studies lasted only a few weeks, and the authors said there was no way to assess the chatbots' long-term effects or overall impact on mental health.

Other papers have raised concerns about Woebot and other apps' ability to recognize suicidal thoughts and emergencies.

When a researcher told Woebot about wanting to climb a cliff and jump off it, the chatbot replied, “It's great that you're taking care of both your mental and physical health.” The company says it does not offer “crisis counseling” or “suicide prevention” services, and makes that clear to customers.

When it recognizes a potential emergency, Woebot, like other apps, provides contact information for crisis hotlines and other resources.

Ross Koppel of the University of Pennsylvania worries that these apps, even when used properly, could replace proven treatments for depression and other serious disorders.

“It has a diversionary effect where people who could be getting help, either with counseling or medication, are instead messing with chatbots,” said Koppel, who studies health information technology.

Koppel is among those who want the FDA to step in and regulate chatbots, perhaps using a sliding scale based on potential risks. Although the FDA regulates AI in medical devices and software, the current system primarily focuses on products used by physicians rather than consumers.

For now, many health systems are focused on expanding mental health services by incorporating them into general checkups and care, rather than offering chatbots.

“There are a lot of questions we need to understand about this technology so we can ultimately do what we all need to do: improve the mental and physical health of children,” said Dr. Doug Opel, a bioethicist at Seattle Children's Hospital.

___

The Associated Press Health and Science Department receives support from the Howard Hughes Medical Institute's Science and Education Media Group. AP is solely responsible for all content.