This section presents an artificial intelligence (AI) based model for supply chain management in CBEC. The approach provisions resources by using a collection of ANNs to forecast future order volumes. Before the method is described in detail, the specifications of the dataset used in this study are given.

Data

The data for this study were obtained by examining the performance of seven active sellers in international product trade over the course of one month. All of these sellers operate globally in the bulk physical product market, which means that all goods bought by clients have to be shipped by land, air, or sea. Each seller uses at least four online sales platforms to trade its items. The datasets assembled for each vendor comprise 945 records, each of which contains data on the orders that customers placed with that vendor. The bulk product transactions in the records range from a minimum of 3 units to a maximum of 29 units. Every record is described by twenty-three attributes, including order registration time, date, month, method (platform type used), order volume, destination, product type, shipping method, active inventory level, product shipping delay history over the previous seven transactions, and product order volume history over the previous seven days. Each of these last two attributes is stored as a single numerical vector.
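To make the record layout concrete, the sketch below models one record as a small Python data class. The field names and encodings are hypothetical, since the text lists the attributes only in prose. One reading that reproduces the stated count of twenty-three attributes is nine scalar fields plus two seven-element history vectors; this is an assumption, not something the text confirms.

```python
from dataclasses import dataclass
from typing import List

HISTORY_LEN = 7  # both histories cover the previous seven transactions/days


@dataclass
class OrderRecord:
    # All field names and encodings are hypothetical illustrations.
    registration_time: float     # e.g. hour of day
    date: int                    # day of month
    month: int
    platform: int                # encoded sales platform ("method")
    order_volume: int            # 3 to 29 units in the collected data
    destination: int             # encoded destination
    product_type: int
    shipping_method: int         # encoded land / air / sea
    inventory_level: float       # active inventory level
    delay_history: List[float]   # shipping delays, previous seven transactions
    volume_history: List[float]  # order volumes, previous seven days

    def to_vector(self) -> List[float]:
        """Flatten the record into one numeric vector (9 scalars + 7 + 7)."""
        if len(self.delay_history) != HISTORY_LEN:
            raise ValueError("delay_history must have seven entries")
        if len(self.volume_history) != HISTORY_LEN:
            raise ValueError("volume_history must have seven entries")
        scalars = [
            self.registration_time, self.date, self.month, self.platform,
            self.order_volume, self.destination, self.product_type,
            self.shipping_method, self.inventory_level,
        ]
        values = scalars + list(self.delay_history) + list(self.volume_history)
        return [float(v) for v in values]
```

Under this reading, `to_vector()` always returns a list of 23 numbers, which would be the raw input to the feature selection stage.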

Proposed framework

This section describes a CBEC system that incorporates a tangible product supply chain under the management of numerous retailers and platforms. The primary objective of this study is to enhance the supply chain performance in CBEC through the implementation of machine learning (ML) and Internet of Things (IoT) architectures. This framework comprises four primary components:

- Retailers: They are responsible for marketing and selling products.

- Common sales platform: Provides a platform for introducing and selling products by retailers.

- Product warehouse: It is the place where each retailer stores their products.

- Supply center: It is responsible for instantly providing the resources needed by retailers.

The CBEC system model comprises N autonomous retailers, all of which are authorized to engage in marketing and distribution of one or more products. Each retailer maintains a minimum of one warehouse for product storage. Additionally, retailers may utilize multiple online sales platforms to market and sell their products.

Consumers place orders through these e-commerce platforms to acquire the products they want. The platform forwards each registered order to the retailer that owns the product. As soon as the retailer receives the order, it generates a sales form and transmits it to the data center located in the supply center. The supply center is responsible for delivering the necessary resources to each retailer in a timely manner. In traditional CBEC systems, the supply center provides resources reactively. This lengthens order processing time, which erodes customer confidence and may ultimately end the customer relationship. The proposed framework instead performs this procedure proactively. Machine learning methods predict the number of orders for each agent (retailer) in future time intervals, and the resources allocated to each agent’s warehouse are determined from these forecasts. In the proposed framework, the agent’s warehouse inventory is updated in the data center after the sales form is transmitted. An ensemble learning model then forecasts the quantity of upcoming orders for the retailer’s product, and the supply center procures the required resources for the retailer based on the forecast. This procedure substantially reduces both the likelihood of inventory depletion and the time required to process orders.

As mentioned earlier, this framework also improves supply chain efficiency by integrating an IoT architecture. For this purpose, RFID technology is used in supply management. Every individual product in the proposed framework is assigned a unique RFID identification tag. Using passive tags reduces the final implementation cost of the system. In the proposed model, the tag serves as an automated data carrier for the RFID-based asset management system. The architecture uses passive RFID devices that operate in the UHF band. In addition, tag reader gateways are installed in each retailer’s product warehouse to monitor merchandise entering and leaving the premises. The product entry and exit procedure begins when the tag reader extracts the unique identifier stored in the RFID tag. This identifier is then transmitted to the controller to which the reader node is connected. The controller node sends a query containing the product’s unique identifier to the data center to obtain product information, including entry/exit authorization. Once the transfer is authorized, the controller node sends a storage command to the data center to register the product transfer information. This registration updates the inventory of the retailer’s product warehouse. The overall operation of the proposed system can therefore be divided into the following two phases:

1. Predicting the number of future orders of each retailer in future time intervals using ML techniques.

2. Assigning resources to the warehouses of specific agents based on the outcomes of predictions and keeping the data center inventory for each agent’s warehouse up to date.

The following sub-sections explain each of these phases.
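The RFID entry and exit procedure described above (tag read, authorization query, storage command, inventory update) can be sketched as follows. This is a minimal in-memory illustration: the class `DataCenter`, the function `on_tag_read`, and the authorization rule (known tag, and stock available for an exit) are all assumptions made for the example, not details given in the text.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple


@dataclass
class DataCenter:
    # tag_id -> (retailer, product); stands in for the product information store
    products: Dict[str, Tuple[str, str]]
    # (retailer, product) -> units currently held in that retailer's warehouse
    inventory: Dict[Tuple[str, str], int] = field(default_factory=dict)
    # log of registered transfers as (tag_id, direction)
    transfers: List[Tuple[str, str]] = field(default_factory=list)

    def authorize(self, tag_id: str, direction: str) -> bool:
        """Answer the controller's query: is this entry/exit allowed?"""
        if tag_id not in self.products:
            return False
        if direction == "exit":
            # assumed rule: an exit needs at least one unit in stock
            return self.inventory.get(self.products[tag_id], 0) > 0
        return direction == "entry"

    def store_transfer(self, tag_id: str, direction: str) -> None:
        """Register the transfer and adjust the warehouse inventory."""
        key = self.products[tag_id]
        delta = 1 if direction == "entry" else -1
        self.inventory[key] = self.inventory.get(key, 0) + delta
        self.transfers.append((tag_id, direction))


def on_tag_read(dc: DataCenter, tag_id: str, direction: str) -> bool:
    """Controller logic for one gateway read: query first, then store."""
    if not dc.authorize(tag_id, direction):
        return False
    dc.store_transfer(tag_id, direction)
    return True
```

A gateway would call `on_tag_read` once per tag it reads; a `False` result means the data center refused the transfer and the inventory is left unchanged.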

ML-based order volume prediction and optimization

Within this framework, the upcoming order volume of each vendor is forecast with a weighted ensemble model. The number of prediction models grows in direct proportion to the number of retailers participating in the CBEC system, because each retailer is served by its own ensemble. To predict the future volume of customer orders for its retailer, each ensemble model combines the forecasts produced by its internal learning models. The supplier then provides supplies to each agent in line with these projections. By proactively removing the delay caused by the reactive supply of requested products, this approach minimizes the overall duration of the supply chain product delivery process. The first step in forecasting sales volume is to identify the attributes with the greatest bearing on the sales volume of a particular merchant, using a combination of ANOVA and FSFS. Sales projections are then generated by a weighted ensemble model built on the sales volume data and the most pertinent features. In the proposed weighted ensemble model for a specific retailer, each of the three ANN models comprising the ensemble is trained on the order patterns of that retailer. While ensemble learning can improve prediction accuracy, two additional factors should be considered to optimize its performance further:

- Acceptable performance of each learning model: Every learning component in an ensemble system has to perform satisfactorily for the combination of their outputs to lower the total prediction error. This calls for well-configured learning models, so that every model continues to perform well across a variety of data patterns.

- Output weighting: In most ensemble applications, the learning components differ in performance: some models forecast the target variable with a lower error rate than others. Consequently, unlike in traditional ensemble systems, the outputs of the predictive components should not all be given the same weight. To address this, a weighting strategy can be applied to the output of each learning component, producing a weighted ensemble system.

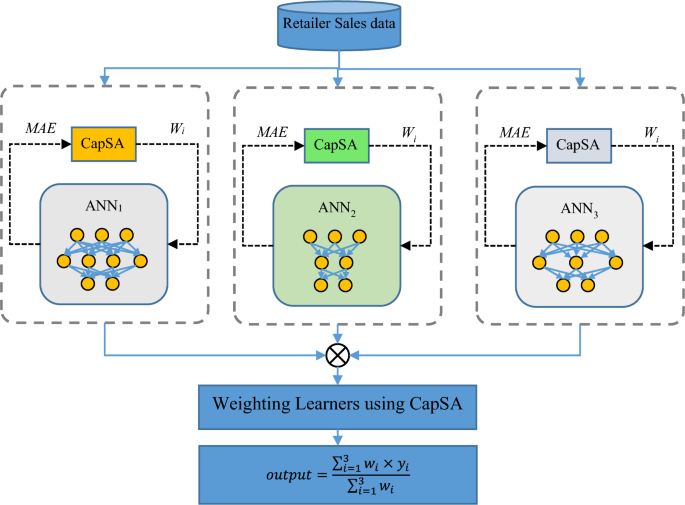

CapSA is utilized in the proposed method to address these two concerns. The operation of the proposed weighted ensemble model for forecasting customer order volumes is illustrated in Fig. 1.

Fig. 1 Operation of the proposed weighted ensemble model for predicting order volume.

As illustrated in Fig. 1, the proposed ensemble model comprises three ANN-based predictive components that work together to forecast the order volume of a retailer. Each learning model is trained on a distinct subset of the sales history data of its retailer. The proposed method uses CapSA to determine the optimal configuration and adjust the weight vector of each ANN model. Note that the configuration of each ANN model differs from that of the other two. Parallel processing can be used to speed up the configuration and training of the models. During the configuration phase, the parameter values of every ANN model are chosen to minimize the mean absolute error. This mechanism yields a well-configured set of learning models, so that each component performs at an acceptable level. After the configuration of each ANN component is complete, the weight of each predictive component’s output is determined, again using CapSA. During this phase, CapSA seeks the output weight of each learning model in relation to its performance.

After CapSA has optimized the weight values, the assembled and weighted models can be used to predict the order volume for new samples. During the testing phase, the input features are provided to each of the predictive components ANN1, ANN2, and ANN3, and the final output of the proposed model is computed as the weighted average of the outputs of these components.

Feature selection

The set of characteristics describing the sales pattern may contain irrelevant features. The proposed approach therefore uses one-way ANOVA to determine the significance of the input features and identify those associated with the sales pattern. The ANOVA test yields an F-score for each feature. In general, features with larger F values are more significant for prediction and are ranked higher. After the features are ranked, the FSFS method selects the desired features. The primary function of FSFS is to determine the most relevant subset of the ranked features. The algorithm builds this subset by iteratively adding features from the input set in the order of their ranking, and the prediction error of the learning model is assessed each time a new feature is added. The procedure stops when adding a new feature degrades the performance of the prediction model. The feature subset with the smallest error is then taken as the optimal subset. The components of the ensemble system are trained on the resulting feature set to forecast sales volume.
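The two stages of feature selection can be sketched in plain Python. The function `anova_f` computes the standard one-way ANOVA F statistic for samples split into groups; how the samples are grouped for each feature is not specified in the text and is left to the caller here. The function `forward_select` adds features in rank order and stops at the first feature that increases the error. The callable `error_of` is a hypothetical stand-in for training and evaluating the learning model on a feature subset.

```python
from typing import Callable, List, Sequence


def anova_f(groups: Sequence[Sequence[float]]) -> float:
    """One-way ANOVA F statistic: between-group over within-group variance."""
    k = len(groups)
    n = sum(len(g) for g in groups)
    grand_mean = sum(sum(g) for g in groups) / n
    means = [sum(g) / len(g) for g in groups]
    ss_between = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, means))
    ss_within = sum((x - m) ** 2 for g, m in zip(groups, means) for x in g)
    if ss_within == 0:
        return float("inf")  # groups are perfectly separated
    return (ss_between / (k - 1)) / (ss_within / (n - k))


def forward_select(ranked: List[str],
                   error_of: Callable[[List[str]], float]) -> List[str]:
    """Add features in rank order; stop when a new feature raises the error."""
    selected: List[str] = []
    best_error = float("inf")
    for name in ranked:
        err = error_of(selected + [name])
        if err > best_error:
            break  # the new feature hurt performance, keep the previous subset
        selected.append(name)
        best_error = err
    return selected
```

Features would first be ranked by descending F-score, for example with `sorted(scores, key=scores.get, reverse=True)`, and the ranked list passed to `forward_select`. Because the error never increases while features are being accepted, the returned subset is also the one with the smallest observed error.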

Configuration of each ANN model based on CapSA in the proposed ensemble system

In the proposed method, CapSA is responsible for identifying the most appropriate neural network topologies and optimal weight values. As previously stated, the ensemble model comprises three ANNs, each of which forecasts the forthcoming sales volume of the associated retailer. The configuration and training of each of these ANN models are carried out independently using CapSA. This section explains how the optimal configuration and weight vector of each ANN model are determined. The structure of the solution vector and the objective function are defined first, followed by the steps CapSA takes to solve the optimization problem. The optimization algorithm uses the solution vector to determine the topology, the biases, and the connection weights of the network. Every solution vector therefore consists of two linked parts. The first part specifies the network topology. The second part specifies the connection weights and biases for the topology given in the first part. As a result, the length of a solution vector in CapSA varies with the topology it defines. Because a neural network can take an unlimited number of topologies, restrictions on the topology must be included in the solution vector. To narrow the search space, the first part of the solution vector is constrained as follows:

1. Every neural network has exactly one hidden layer. The first part of the solution vector therefore consists of a single element, whose value is the number of neurons in the hidden layer.

2. The hidden layer of the neural network has a minimum of 4 and a maximum of 15 neurons.

The dimensions of the input and output layers of the neural network are determined by the number of input features and target variables, respectively. The first part of the solution vector, which defines the topology, therefore specifies only the number of neurons in the hidden layer. The value stored in the first part in turn determines the length of the second part. For a neural network with I input neurons, H hidden neurons, and P output neurons, the length of the second part of the solution vector in CapSA is equal to \(H\times (I+1)+P\times (H+1)\): each hidden neuron has I incoming weights and one bias, and each output neuron has H incoming weights and one bias.
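As an illustration of this encoding, the sketch below computes the length of the second part, splits a solution vector into per-neuron rows, and runs a forward pass. The ordering of weights inside the vector and the activation functions (tanh in the hidden layer, linear outputs) are assumptions, since the text does not specify them; the function names are hypothetical.

```python
import math
from typing import List, Tuple

H_MIN, H_MAX = 4, 15  # hidden-layer bounds stated in the text

Rows = List[List[float]]


def weights_length(i: int, h: int, p: int) -> int:
    """Length of the second part of the solution vector: H*(I+1) + P*(H+1)."""
    return h * (i + 1) + p * (h + 1)


def decode(solution: List[float], i: int, p: int) -> Tuple[Rows, Rows]:
    """Split a solution vector into hidden-layer and output-layer rows.

    Each row holds one neuron's incoming weights followed by its bias
    (this ordering is an assumption).
    """
    h = int(round(solution[0]))  # first part: number of hidden neurons
    if not H_MIN <= h <= H_MAX:
        raise ValueError("hidden layer size out of bounds")
    body = solution[1:]          # second part: weights and biases
    if len(body) != weights_length(i, h, p):
        raise ValueError("second part has the wrong length")
    hidden = [body[r * (i + 1):(r + 1) * (i + 1)] for r in range(h)]
    offset = h * (i + 1)
    output = [body[offset + r * (h + 1):offset + (r + 1) * (h + 1)]
              for r in range(p)]
    return hidden, output


def forward(x: List[float], hidden: Rows, output: Rows) -> List[float]:
    """Forward pass with assumed tanh hidden units and linear output units."""
    a = [math.tanh(sum(w * v for w, v in zip(row[:-1], x)) + row[-1])
         for row in hidden]
    return [sum(w * v for w, v in zip(row[:-1], a)) + row[-1] for row in output]
```

For example, with I = 23 inputs, H = 4 hidden neurons, and P = 1 output, the second part has 4 × 24 + 1 × 5 = 101 elements, so the full solution vector has 102.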

In CapSA, the identification of optimal solutions involves the application of a fitness function to each one. To achieve this goal, following the solution vector-driven configuration of the neural network’s weights and topology, the network produces outputs for the training samples. These outputs are then compared to the actual target values. Following this, the mean absolute error criterion is applied to assess the neural network’s performance and the generated solution’s optimality. CapSA’s fitness function is thus characterized as follows:

$$MAE=\frac{1}{N}\sum_{i=1}^{N}\left|{T}_{i}-{Z}_{i}\right|$$

(1)

In this context, N denotes the number of training samples, \({T}_{i}\) is the target value of the i-th training sample, and \({Z}_{i}\) is the output generated by the neural network for that sample. The proposed method uses CapSA to find a neural network structure that minimizes Eq. (1). In CapSA, the initial population is generated at random, and the search bounds for the second part of the solution vector are set to [−1, +1]. Thus, all connection weights and biases of the neural network fall within this range. CapSA determines the optimal solution through the following steps:

Step 1 The initial population of Capuchin agents is initialized with random values.

Step 2 The fitness of each solution vector (Capuchin) is calculated based on Eq. (1).

Step 3 The initial speed of each Capuchin agent is set.

Step 4 Half of the Capuchin population is randomly selected as leaders and the rest are designated as follower Capuchins.

Step 5 If the number of algorithm iterations has reached the maximum G, go to step 13, otherwise, repeat the following steps:

Step 6 The CapSA lifespan parameter is calculated as follows [27]:

$$\tau ={\beta }_{0}{e}^{-{\beta }_{1}{\left(\frac{g}{G}\right)}^{{\beta }_{2}}}$$

(2)

where g represents the current number of iterations, and the parameters \({\beta }_{0}\), \({\beta }_{1}\), and \({\beta }_{2}\) have values of 2, 21, and 2, respectively.

Step 7 Repeat the following steps for each Capuchin agent i (leader or follower):

Step 8 If i is a Capuchin leader, update its speed based on Eq. (3) [27]:

$${v}_{j}^{i}=\rho {v}_{j}^{i}+\tau {a}_{1}\left({x}_{{\mathrm{best}}_{j}}^{i}-{x}_{j}^{i}\right){r}_{1}+\tau {a}_{2}\left(F-{x}_{j}^{i}\right){r}_{2}$$

(3)

where the index j denotes the dimension of the problem and \({v}_{j}^{i}\) is the speed of Capuchin i in dimension j. \({x}_{j}^{i}\) is the position of Capuchin i for the j-th variable, and \({x}_{{\mathrm{best}}_{j}}^{i}\) is the best position found so far by Capuchin i for the j-th variable. In the CapSA formulation [27], \(F\) denotes the position of the food source, that is, the best solution found so far by the population, and \({a}_{1}\) and \({a}_{2}\) are constant coefficients that weight the pull toward the personal best and toward \(F\). \({r}_{1}\) and \({r}_{2}\) are two random numbers in the range [0,1]. Finally, \(\rho\) is the coefficient applied to the previous speed, which is set to 0.7.

Step 9 Update the new position of the leader Capuchins based on their speed and movement pattern.

Step 10 Update the new position of the follower Capuchins based on their speed and the leader’s position.

Step 11 Calculate the fitness of the population members based on Eq. (1).

Step 12 If the entire population’s position has been updated, go to Step 5, otherwise, repeat the algorithm from Step 7.

Step 13 Return the solution with the lowest fitness value (smallest MAE) as the optimal configuration of the ANN model.
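Three of the computations in the steps above are simple enough to sketch directly: the fitness of Steps 2 and 11, the lifespan parameter of Step 6, and the leader speed update of Step 8. The sketch assumes that the fitness is the mean absolute error averaged over the N training samples and that the lifespan parameter takes the decaying form \(\tau ={\beta }_{0}{e}^{-{\beta }_{1}{(g/G)}^{{\beta }_{2}}}\). The values of \({a}_{1}\) and \({a}_{2}\) are not given in the text, so the defaults below are placeholders, and `food` stands for the best position found so far by the population. The position updates of Steps 9 and 10 are not sketched because the text does not detail them.

```python
import math
import random
from typing import List, Sequence


def mae(targets: Sequence[float], outputs: Sequence[float]) -> float:
    """Mean absolute error between targets T_i and network outputs Z_i."""
    return sum(abs(t - z) for t, z in zip(targets, outputs)) / len(targets)


def lifespan(g: int, G: int, b0: float = 2.0, b1: float = 21.0,
             b2: float = 2.0) -> float:
    """Lifespan parameter tau, assumed form b0 * exp(-b1 * (g / G) ** b2)."""
    return b0 * math.exp(-b1 * (g / G) ** b2)


def leader_velocity(v: Sequence[float], x: Sequence[float],
                    x_best: Sequence[float], food: Sequence[float],
                    tau: float, a1: float = 1.0, a2: float = 1.0,
                    rho: float = 0.7, rng=random) -> List[float]:
    """Leader speed update, applied independently to each dimension j.

    a1 and a2 are placeholder values; rho = 0.7 is taken from the text.
    """
    new_v = []
    for vj, xj, bj, fj in zip(v, x, x_best, food):
        r1, r2 = rng.random(), rng.random()  # uniform random numbers in [0, 1)
        new_v.append(rho * vj
                     + tau * a1 * (bj - xj) * r1
                     + tau * a2 * (fj - xj) * r2)
    return new_v
```

With the stated parameters, `lifespan` starts at 2 in the first iteration and decays toward zero as g approaches G, so the pull toward the personal best and the food position in `leader_velocity` weakens late in the search while the inertia term scaled by `rho` remains.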

Once each predictive component has been configured and trained, CapSA is used once more to assign the most suitable weight to each component. The objective of optimal weight allocation is to determine the significance coefficient of the output of each predictive component ANN1, ANN2, and ANN3 with respect to the final output of the proposed ensemble system. The optimization variables in this use of CapSA are therefore the coefficients of the three estimation components of the ensemble model. Accordingly, the length of each Capuchin is fixed at three, and its elements are the weight coefficients assigned to the outputs of ANN1, ANN2, and ANN3, respectively. The search range of each optimization variable is the real interval [0,1]. Since the computational steps of CapSA were described in the preceding section, the only remaining point is the fitness function. CapSA assigns weights to the learning components using the following fitness function, which is based on the mean absolute error criterion:

$$fitness=\frac{1}{n} \sum_{i=1}^{n}\left|{T}_{i}-\frac{\sum_{j=1}^{3}{w}_{j}\times {y}_{j}^{i}}{\sum_{j=1}^{3}{w}_{j}}\right|$$

(4)

where \({T}_{i}\) represents the actual value of the target variable for the i-th sample, \({y}_{j}^{i}\) is the output estimated by the ANNj model for the i-th training sample, and \({w}_{j}\) is the weight assigned to the ANNj model by the solution vector. Finally, n is the number of training samples.

Each model is assigned a weight coefficient in the interval [0,1] that defines how much it contributes to the final output of the ensemble model. Note that the weighting of the learning components is performed only once, after training and configuration have been completed. Once CapSA has determined the optimal weight of each learning component, the volume of forthcoming orders is predicted using the trained models and these weights. After the outputs of all three ANN models have been obtained, the proposed weighted ensemble model computes the number of forthcoming orders as follows:

$$output=\frac{\sum_{i=1}^{3}{w}_{i}\times {y}_{i}}{\sum_{i=1}^{3}{w}_{i}}$$

(5)

Here, \({w}_{i}\) and \({y}_{i}\) denote the weight assigned to the ANNi model and the value it predicts for the given input sample, respectively. The retailer then meets its future obligations in accordance with the prediction produced by this ensemble model.
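The weighted combination of Eq. (5) and the weight-search fitness of Eq. (4) can be sketched together, since the fitness is the mean absolute error of the combined output over the training samples. The function names are hypothetical, and raising an error when all weights are zero is a choice made for this example; the text does not say how that case is handled.

```python
from typing import Sequence


def ensemble_output(weights: Sequence[float], preds: Sequence[float]) -> float:
    """Weighted average of the component predictions for one sample."""
    total = sum(weights)
    if total == 0:
        # with a search range of [0, 1] all weights can be zero;
        # rejecting that case is an assumption of this sketch
        raise ValueError("at least one weight must be non-zero")
    return sum(w * y for w, y in zip(weights, preds)) / total


def ensemble_fitness(weights: Sequence[float],
                     targets: Sequence[float],
                     preds_per_sample: Sequence[Sequence[float]]) -> float:
    """Mean absolute error of the weighted ensemble over the training set."""
    errors = [abs(t - ensemble_output(weights, ys))
              for t, ys in zip(targets, preds_per_sample)]
    return sum(errors) / len(errors)
```

For instance, with weights (0.5, 0.25, 0.25) and component predictions of 10, 12, and 16 units, the combined forecast is (5 + 3 + 4) / 1 = 12 units. Because the output is normalized by the sum of the weights, only the ratios between the weights matter.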

Supplying the resources of each agent’s warehouses based on prediction results

Predicting the sales volume of the product for each retailer makes it possible to procure the required resources in line with the projected sales volume. By distributing the supplier’s limited resources equitably, this mechanism aims to maximize the effectiveness of the sales system. In the following, the sales volume predicted by the model for retailer i is denoted by \({p}_{i}\) and the agent’s current inventory by \({v}_{i}\). The total distribution capacity of the supplier is denoted by L. The supplier allocates the required resources to the retailers as follows:

1. Sales volume prediction: Applying the model described in the previous part, the upcoming sales volume for each agent in the future time interval (\({p}_{i}\)) is predicted.

2. Receiving warehouse inventory: The current inventory of every agent (\({v}_{i}\)) is received through supply chain management systems.

3. Calculating the required resources: The amount of resources required for the warehouse of each retailer is calculated as follows:

$$S_{i} = \max \left( {0,p_{i} - v_{i} } \right)$$

(6)

4. Calculating each agent’s share of allocatable resources: The share of each retailer from the allocatable resources is calculated by Eq. (7), where N represents the number of retailers:

$${R}_{i}=\frac{{S}_{i}}{\sum_{j=1}^{N}{S}_{j}}$$

(7)

5. Resource allocation: The supply center sends the needed resources for each agent according to the allocated share (\({R}_{i}\)) to that agent’s warehouse.

6. Inventory update: The inventory of every agent is updated with the receipt of new resources.
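The allocation steps can be sketched as a single function. Eqs. (6) and (7) are implemented as written. How the share \({R}_{i}\) is converted into a shipped quantity is not stated in the text, so the sketch assumes that each retailer receives its share of the capacity L, capped at its actual requirement; the function name and this rule are illustrative assumptions.

```python
from typing import List


def allocate(predicted: List[float], inventory: List[float],
             capacity: float) -> List[float]:
    """Return the amount shipped to each retailer's warehouse."""
    # Eq. (6): shortfall between forecast demand p_i and current stock v_i
    need = [max(0.0, p - v) for p, v in zip(predicted, inventory)]
    total = sum(need)
    if total == 0:
        return [0.0] * len(need)  # nobody needs replenishment
    # Eq. (7): each retailer's share of the allocatable resources
    share = [s / total for s in need]
    # Assumption: share R_i is applied to capacity L and capped at need S_i
    return [min(s, r * capacity) for s, r in zip(need, share)]


# Step 6: inventories are updated with the received resources
predicted = [20.0, 10.0, 5.0]
inventory = [5.0, 10.0, 0.0]
shipped = allocate(predicted, inventory, capacity=10.0)
updated = [v + s for v, s in zip(inventory, shipped)]
```

In this example the requirements are 15, 0, and 5 units, the shares are 0.75, 0, and 0.25, and a capacity of 10 units yields shipments of 7.5, 0, and 2.5 units. When the capacity exceeds the total requirement, every retailer receives exactly what it needs.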