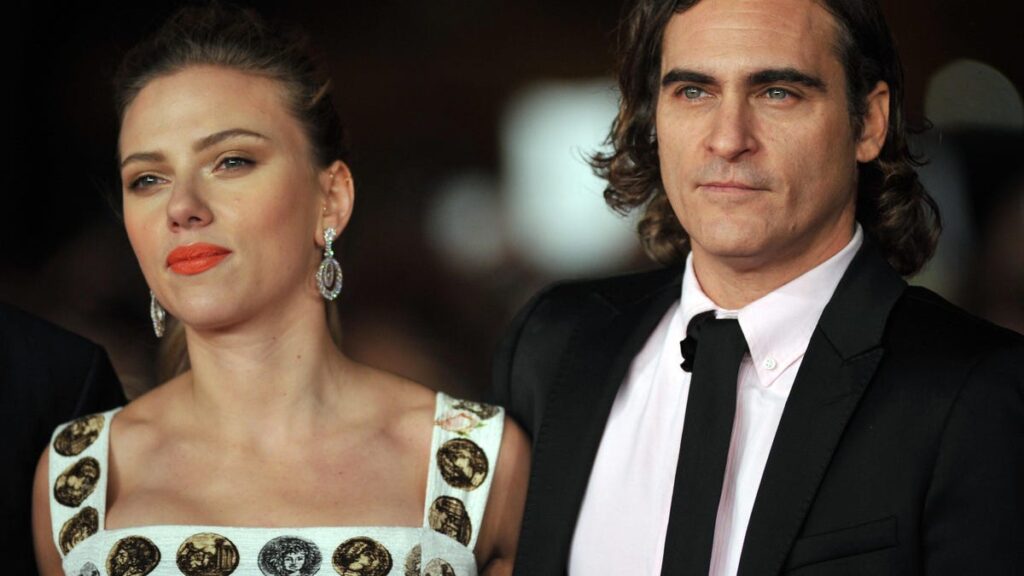

OpenAI CEO Sam Altman and his team recently wrote a nearly 740-word blog post defending themselves against a claim by Scarlett Johansson. The actor had called out the company for apparently co-opting her voice to use as one of the “five distinct voices” in its latest AI engine.

“We believe that AI voices should not deliberately mimic a celebrity’s distinctive voice — Sky’s voice is not an imitation of Scarlett Johansson but belongs to a different professional actress using her own natural speaking voice,” the company said in a statement before releasing the blog post explaining how its Voice Mode voices were chosen.

OpenAI said it put out a casting call and got submissions from more than 400 voice and screen actors, which it narrowed down to 14 finalists before deciding on the final five voices for modes called Breeze, Cove, Ember, Juniper and Sky. The company said it wanted voices that felt timeless, approachable, natural, easy to listen to, warm, engaging, confidence inspiring and charismatic, with a rich tone.

All that sounds reasonable, except for the fact that Johansson told NPR that she was “shocked, angered and in disbelief,” because Altman and company had approached her — twice — about licensing her voice and she’d said no.

Johansson said that the first time, in September, Altman told her that her voice would be “comforting to people” who are uncertain about the technology. The second time, she said, was two days before the debut of new Voice Mode capabilities in OpenAI’s ChatGPT chatbot, at a May 13 company event. OpenAI asked if Johansson would reconsider, but then it didn’t wait to connect with her before demoing the voice as part of new audio features in ChatGPT-4o.

“The demonstration of OpenAI’s virtual assistant quickly drew comparisons to Johansson’s character from the 2013 movie Her,” CNET’s Sareena Dayaram reported. “Johansson played Samantha, a virtual assistant that develops an intimate relationship with a lonely writer.”

NPR noted that “Altman, who has said the Spike Jonze film is his favorite movie, invited comparisons by posting the word ‘Her’ on X after the company announced the new ChatGPT version.” OpenAI dropped the voice on May 19, saying in an X post that it was working on addressing “questions about how we chose voices in ChatGPT.”

As for Johansson, her lawyers sent two letters, but it’s not yet known whether she’ll pursue a lawsuit. Still, the actor, who has starred in notable sci-fi flicks including Lucy and Ghost in the Shell, and as Black Widow in the Marvel Cinematic Universe, told NPR she felt compelled to call out the AI startup for its decision to “pursue a voice that sounded so eerily similar to mine that my closest friends and news outlets could not tell the difference.”

Why would Altman call out Her, try to get Johansson to change her mind, and then have his company deny that it intended to “deliberately mimic” a celebrity voice? Tech publication The Information put it down to entrepreneurial hubris, in a story titled “Sam Altman’s Naughty Side.” It said: “To some people steeped in Silicon Valley startup culture, Altman’s actions were a virtue from the founder playbook: Find creative solutions to getting what you want, even when people say no. For years, that has been a trait that top VCs look for.”

But The Information also added, after talking to lawyers, that OpenAI may be guilty of “appropriation of likeness.” That’s defined as using someone’s name, likeness, voice, nickname — aspects of their personal identity — to make money without their permission.

Other actors are licensing themselves for AI use cases, including James Earl Jones, who’s given permission to use his voice for future Darth Vader roles. Creative Artists Agency is also working on making “digital doubles” of some of its A-list clients.

Whether or not you buy OpenAI’s explanation, I agree with Bloomberg that “Scarlett Johansson proves she’s nobody’s chatbot,” and with The Washington Post’s Shira Ovide, who added the Johansson incident to the Top 7 list of “most boneheaded self-owns” by a tech company.

“OpenAI is already being sued over stealing others’ work, which has called into question the foundation of this new generation of AI,” Ovide reminded us. “Altman also has a reputation for running roughshod over people who stand in his way. Now OpenAI is accused of ripping off a well-known (and previously litigious) celebrity and being duplicitous about it.”

When it comes to AI, every day is truly an adventure. (Reminder, if you want to stay up to date on the latest in AI doings, check out CNET’s AI Atlas consumer hub.)

Here are the other doings in AI worth your attention.

Microsoft says its ‘Copilot Plus’ PCs deliver computers that understand you

“There’s a lot of AI looking over your shoulder in your future.”

That’s how CNET’s Lori Grunin summarized the news from Microsoft last week that the company is building AI directly into Windows-based computers, with its Copilot AI software set to power a new generation of laptops. Those laptops will be available June 18, starting at about a thousand bucks, from top PC makers including Acer, Asus, Dell, HP, Lenovo and Samsung. Those computers, as well as new Microsoft Surface laptops, represent a new category of Windows-based PCs that Microsoft is calling “Copilot Plus” PCs.

The AI will be powered by Qualcomm Snapdragon chips, including a Neural Processing Unit, or NPU, specifically designed to work with other PC processors to handle the generative AI capabilities. Having that specialized hardware means all the functions made possible by AI assistants like Copilot can be handled by the laptop rather than in the cloud — so you don’t need to be connected to the internet to access that functionality.

“The ability to crunch AI data directly on the computer lets Copilot+ include a feature called ‘Recall,'” Reuters explained. “‘Recall’ tracks everything done on the computer, from Web browsing to voice chats, creating a history stored on the computer that the user can search when they need to remember something they did, even months later.”

Microsoft unveiled the PCs at its annual Build conference. Yusuf Mehdi, the tech giant’s consumer marketing chief, said the company expects that 50 million AI PCs will be sold over the next year. Microsoft’s Windows operating system is the dominant computer OS and powers more than 70% of the world’s computers, according to Statista.

Microsoft CEO Satya Nadella told The Wall Street Journal that the Copilot Plus PCs are the start of bringing gen AI–based intelligent agents to all types of devices, from computers to phones to glasses. Said Nadella, “The future I see is a computer that understands me versus a computer that I have to understand.”

OpenAI talks safety as tech giants agree on AI ‘kill switch’

Before commenting on the Scarlett Johansson news, OpenAI was already defending itself in light of reports that the exit of some senior leaders, including chief scientist and co-founder Ilya Sutskever, had led to questions about how the work of a key security team would be handled. That team has been “focused on ensuring the safety of possible future ultra-capable artificial intelligence,” as Bloomberg put it. The more-capable AI in question is artificial general intelligence, or AGI, a yet-to-be-developed technology that would have humanlike smarts and the ability to learn.

Instead of that “alignment, superalignment” team remaining a standalone group, its work will be integrated into safety efforts being handled by different research teams, according to reports by Bloomberg and Axios. Axios reminded us that “in the AI industry, ‘alignment’ refers to efforts to make sure that AI programs share the same goals as the people who use them. ‘Superalignment’ typically refers to making sure that the most advanced AGI projects that OpenAI and others in the industry hope to develop do not turn on their creators.”

Jan Leike, one of the alignment, superalignment team leaders, posted on X after his departure, saying he didn’t think OpenAI was on the right trajectory as it works toward building an AGI. “Building smarter-than-human machines is an inherently dangerous endeavor. OpenAI is shouldering an enormous responsibility on behalf of all of humanity,” he wrote. “But over the past years, safety culture and processes have taken a backseat to shiny products.”

Both OpenAI CEO Sam Altman and President Greg Brockman posted responses on X to Leike’s posts. Altman said Leike was “right we have a lot more to do.” Brockman said that though “there’s no proven playbook for how to navigate the path to AGI,” OpenAI has been putting in place “the foundations needed for safe deployment of increasingly capable systems.”

Meanwhile, OpenAI has joined other AI makers, including Amazon, Google, Meta, Microsoft and Samsung, in agreeing to a series of voluntary, nonbinding commitments and promising to make investments in research, testing and safety. The UK’s Department for Science, Innovation & Technology noted the companies’ sign-on to the nonbinding commitments last week, during a summit in Seoul, South Korea, focused on AI.

The companies also agreed to build a “kill switch” into their AIs, CNET’s Ian Sherr and others reported, effectively allowing them to shut down their systems in the case of a catastrophe. The AI Seoul Summit was a follow-up to a November gathering of world leaders at Bletchley Park in the UK, “where participating countries agreed to work together to contain the potentially ‘catastrophic’ risks posed by galloping advances in AI,” NPR said.

In spite of the promises made at the AI Seoul Summit, AI experts continue to raise alarms. “Society’s response, despite promising first steps, is incommensurate with the possibility of rapid, transformative progress that is expected by many experts,” a group of 15 specialists, including AI pioneer Geoffrey Hinton, wrote in the journal Science last week. “There is a responsible path — if we have the wisdom to take it.”

It’s that last part — the wisdom to take it — that has me worried.

Humane, maker of the AI Pin, may be up for sale for a billion dollars

A month after Humane launched its $699 AI Pin to pretty dismal reviews from CNET and others, Bloomberg cited anonymous sources in reporting that the wearable startup is looking for a buyer willing to pay $1 billion for the company.

Humane, started by former members of Apple’s design team, has hired a financial adviser to consult on the sale, Bloomberg said, adding that an acquisition wasn’t certain. Humane didn’t reply to a CNET request for comment.

“Usage of AI technologies has exploded in the past two years since the startup OpenAI launched its ChatGPT AI chatbot for public use,” CNET’s Ian Sherr said in reporting on the possible sale. Instead of a software-based chatbot, Sherr said, “Humane pitches its AI through a square-shaped, smooth-edged device designed to be worn on shirts or bag straps, with its cameras and sensors facing out toward the world. Humane says the device is designed to offer a next step after smartphones, with people using AI to interact with apps and services, like hailing an Uber or listening to music.”

In addition to its rather hefty starting price, the AI Pin requires a $24 per month subscription for wireless service. After getting customer feedback, the company said it’s working to improve the device, with its priorities on improving battery life, reducing response latency, and fine-tuning accuracy for “a smoother experience.”

No word on potential buyers, but I’ll just note that former Apple design chief Jony Ive is reportedly looking to build a company to make AI devices, including wearables.

Google lets you bypass AI summaries in search results — and skip the ads too

It’s been a busy month for Google, with the news from its I/O summit about how gen AI will be built into all its products and new services. Among those services are AI Overviews, AI-generated summaries (created with the company’s Gemini large language model) that stitch together information from a variety of sources across the web and are featured at the top of Google search results.

The company says its goal is to save users time and effort by providing them with answers to questions, instead of with just a list of the most popular or relevant links. But some people are criticizing the feature, saying they want the links and not Google’s version of the answers. Publishers also worry that Overviews will train users not to seek out original sources and will limit search traffic to their sites, robbing them of a key source of revenue.

As I noted in my column from I/O, the goal, said Google, is to do “all the Googling for you.”

Now Google has quietly launched a filter that allows you to get “nothing but text-based links,” CNET’s Peter Butler reported. “No ads, no AI, no images, no videos.”

“When the Web filter is selected, your search results will return only text-based links, with no advertisements, AI summaries or knowledge panels like ‘Top Stories’ or ‘People Always Ask,'” Butler explained. “The new filter should appear below the search box on the Google Search results page, among other filters like News, Images and Videos. You’ll usually have to click the three-dot ‘More’ menu to see it as an option.”

If you’re not a fan of AI Overviews or ads and sponsored content at the top of your search results, try the Web filter.

J-Lo saves the world from the first terrorist AI in Netflix’s ‘Atlas’

AI has already been a major theme in numerous movies, including Blade Runner, Ex Machina, Oblivion, Her and The Creator, and TV series, including Black Mirror, Dark Matter, and Westworld.

Now comes Atlas, streaming on Netflix, in which Jennifer Lopez stars as an intelligence analyst who has to outwit Harlan, the world’s “first AI terrorist” (played stoically by Simu Liu, of Shang-Chi and the Legend of the Ten Rings and Barbie).

J-Lo plays the title character, described by her boss as a brilliant but “not user-friendly” agent who likes strong coffee — quad Americanos — but isn’t a fan of AI. That’s understandable, given that her mother was killed by Harlan, who transforms from a domestic robot into an AGI villain intent on “saving” humanity by destroying many of his human overlords.

Here’s the (spoiler-free) plot twist: To defeat the AI that’s upended her life, Atlas needs to partner (literally — we’re talking about a neurolink) with an AI named Smith, who drives the high-powered armored space suit that’s keeping her alive on an inhospitable planet. The message is that humans plus AI can work together to be “something greater.”

I’m a fan of J-Lo and I’m all for her saving the world. But I think the script — and dialogue — let her down. (Case in point: “If we don’t stop Harlan, all life on Earth is doomed.”) And that’s too bad, because the flick also wastes the talents of a great cast, which includes Mark Strong and Sterling K. Brown.

You can decide for yourself whether it’s worth your time.

Editors’ note: CNET used an AI engine to help create several dozen stories, which are labeled accordingly. The note you’re reading is attached to articles that deal substantively with the topic of AI but are created entirely by our expert editors and writers. For more, see our AI policy.