Everyone is rushing to get the latest and greatest GPU accelerators to build generative AI platforms. Those who can't get GPUs, or who have custom devices better suited to their workloads than GPUs, deploy other types of accelerators.

The companies designing these AI computing engines have two things in common. First, they all use Taiwan Semiconductor Manufacturing Company as their chip-etching foundry, and many also use TSMC as their socket packager. Second, they haven't lost their minds. Looking at the devices launched so far this year, designers of AI computing engines are hanging back a bit rather than chasing the bleeding edge of process and packaging technology, so they can reap the benefits of products and processes that were very expensive to develop.

Nowhere is this better illustrated than by the fact that Nvidia's future “Blackwell” B100 and B200 GPU accelerators, which aren't even scheduled to begin shipping until later this year, are based on Taiwan Semiconductor Manufacturing Company's N4P process, a variant of the N4 process used in the previous-generation “Hopper” H100 and H200 GPUs and also a 4-nanometer product.

TSMC has long been clear that it expects the N3 node to be a very important high-volume product. It is interesting that Nvidia chose not to use it, while Apple has used it for its smartphone chips and both AMD and Intel plan to use it for future CPUs. Apple is reportedly using up all of TSMC's N3 capacity for its Arm-based smartphone and Mac PC processors. It looks to us like Nvidia is holding back from the N3 process while Apple helps TSMC work out the kinks of this rather tricky 3-nanometer process, so that it can be in the driver's seat for TSMC's N2 process, the 2-nanometer nanosheet transistor process. In 2025, the real competition with Intel Foundry's 18A process will begin. However, TSMC has made clear that it believes its enhanced 3-nanometer process, called N3P, can compete with Intel's 18A.

Rumor has it that most of the $15 billion in forward bookings that Intel Foundry has on its books, spread over many years, is for Arm server chips and AI training and inference chips that Microsoft is building for itself and its partner, OpenAI. That is the word on the street, anyway. Given the patriotic national security flag-waving that is going on and the real need among all chip designers for a second source of chip supply, we believe Intel's goal of $15 billion in annual external foundry revenue by 2030 is on the low side. We can see Intel's internal product groups for PC and server chips driving perhaps another $25 billion in foundry revenue. Call it $40 billion, and no one knows how much profit Intel Foundry will make.

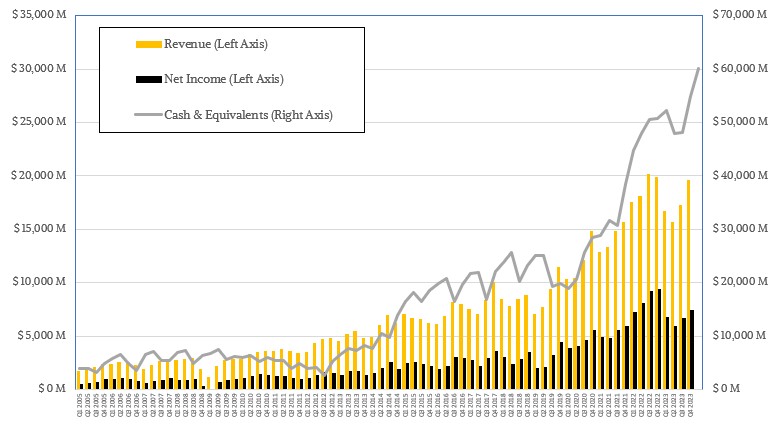

TSMC had sales of $69.3 billion and net income of $26.9 billion in 2023, a year in which sales fell by 8.7% and net income fell by a harsh 21%. The PC slump did considerable damage and smartphone sales were sluggish too, but PC sales have now flattened out and smartphone sales are recovering. AI acceleration will give chip manufacturers a new, large, and highly profitable source of customers.

How much? Here are some hints from TSMC. When going over its second-quarter 2023 financial results, TSMC executives said that 6% of revenue, or about $941 million, came from manufacturing compute engines that do AI training and inference. We think this was a local minimum rather than a local maximum among the quarters of 2023 (it's hard to say).

TSMC CEO CC Wei then commented on the company's first quarter 2024 financial results:

“Nearly every AI innovator is working with TSMC to meet the insatiable AI-related demand for energy-efficient computing power. We forecast the revenue contribution from several AI processors to more than double this year and to account for a low-teens percentage of our total revenue in 2024. Over the next five years, we expect it to grow at a CAGR of 50% and to rise to more than 20% of our revenue. These AI processors are narrowly defined as GPUs, AI accelerators, and CPUs performing training and inference functions, and do not include networking, edge, or on-device AI. We expect them to be our strongest driver and the largest contributor to our overall revenue growth over the next few years.”

Well, this is usable data. So let's have some fun.

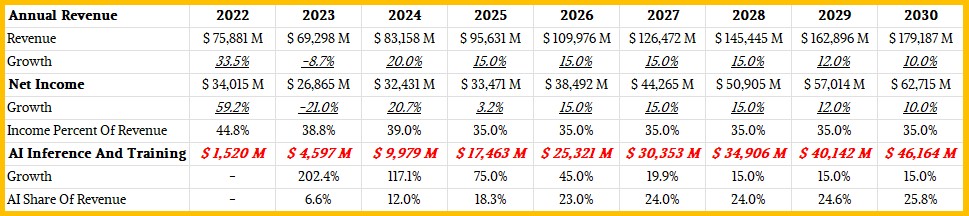

Given the 6% hint from Q2 2023, the low-teens percentage in 2024, the 2x factor in 2024, and the 50% CAGR, we can plot AI revenue as a share of total revenue through 2030. The plot is based on a forecast of overall revenue, on AI training and inference revenue growing to more than 20% of the total, and on a simple extrapolation of historical revenue and income. We assume that the share of revenue from AI accelerators will eventually level off (probably sooner than we think).

TSMC's compound annual growth rate from 2005 to 2023 was 13.8%. We simply assumed growth would average 15% per year at first, and then intentionally bent that curve down. We expect a 15% jump in 2024. The model also assumes that net income stays at around 35% of revenue.

According to this forecast, TSMC is expected to reach sales of $145.4 billion by 2028, of which $34.9 billion would come from AI chips. This follows from pegging TSMC's AI chip sales in 2023 at $4.6 billion and applying a 50% CAGR from 2023 to 2028. (This isn't the only way to solve this equation, but it's one that doesn't go against our sensibilities.) Not all GPUs are used for AI, so it really depends on the definition that TSMC uses. Don't worry too much about the details. This is a thought experiment.
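The compound-growth arithmetic behind the AI figure can be checked with a few lines of Python. This is only a sketch under the article's own numbers ($4.6 billion in 2023, a 50% CAGR over five years, $145.4 billion total in 2028); the helper name `project_cagr` is ours and purely illustrative.

```python
# Hedged sketch of the compound-growth arithmetic behind the forecast.
# All inputs are the article's figures; project_cagr is a hypothetical helper.

def project_cagr(start_value: float, rate: float, years: int) -> float:
    """Grow start_value at a constant annual rate for the given number of years."""
    return start_value * (1.0 + rate) ** years

ai_revenue_2023 = 4.6        # $ billions, the article's estimate for 2023
total_revenue_2028 = 145.4   # $ billions, the article's forecast for 2028

ai_revenue_2028 = project_cagr(ai_revenue_2023, rate=0.50, years=5)
ai_share_2028 = ai_revenue_2028 / total_revenue_2028

print(f"AI revenue in 2028: ${ai_revenue_2028:.1f} billion")
print(f"AI share of 2028 revenue: {ai_share_2028:.1%}")
```

Five years at 50% multiplies the starting figure by about 7.6, which lands at roughly $34.9 billion, or about 24% of the forecast total, comfortably above the 20% share mentioned in Wei's comments.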

This is the point. If things go more or less as we expect under this model (which assumes there is no oversupply in the IT chip market and no major recessions or world wars), TSMC's sales could be around $180 billion by 2030, with around $46 billion of that coming from AI chips.

So Intel may indeed become the second largest foundry after TSMC, but TSMC would still be about 4.5x larger. And the AI training and inference part of TSMC's chip foundry business alone would be a bit larger than Intel's entire foundry business.
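The comparison is simple division, sketched here with the article's round numbers: $15 billion external plus $25 billion internal for Intel Foundry, and $180 billion total with $46 billion from AI for TSMC in 2030. The variable names are ours, and every input is a projection rather than a reported result.

```python
# Hedged sketch: the 2030 foundry comparison, using the article's projections.
intel_external = 15.0    # $ billions, Intel's external foundry target
intel_internal = 25.0    # $ billions, the article's guess for in-house chips
intel_foundry_total = intel_external + intel_internal

tsmc_total_2030 = 180.0  # $ billions, the model's 2030 total for TSMC
tsmc_ai_2030 = 46.0      # $ billions, the model's 2030 AI chip revenue

size_ratio = tsmc_total_2030 / intel_foundry_total
ai_vs_intel = tsmc_ai_2030 / intel_foundry_total

print(f"TSMC vs. Intel Foundry: {size_ratio:.1f}x")
print(f"TSMC AI revenue vs. Intel Foundry total: {ai_vs_intel:.2f}x")
```

The first ratio gives the 4.5x gap, and the second (about 1.15x) is what "a bit larger" amounts to.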

This model obviously also assumes healthy consumption of highly complex CPUs for servers, PCs, and smartphones. It implies that spending on them roughly doubles, while the computing power of these devices probably increases by an average of 5x to 10x over seven years.

Crazy, right?

So let's actually talk about TSMC's numbers for Q1 2024.

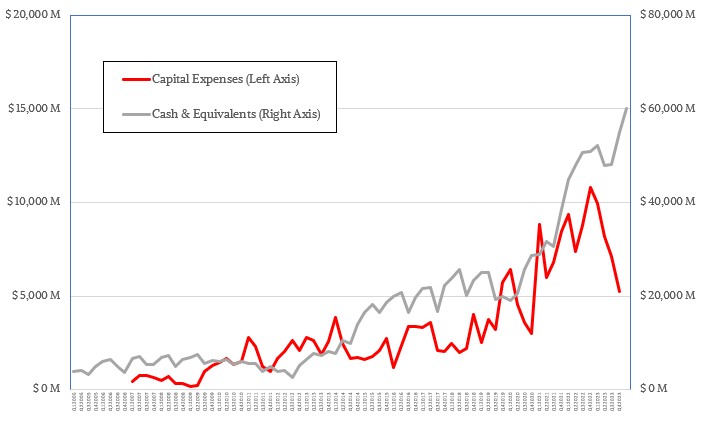

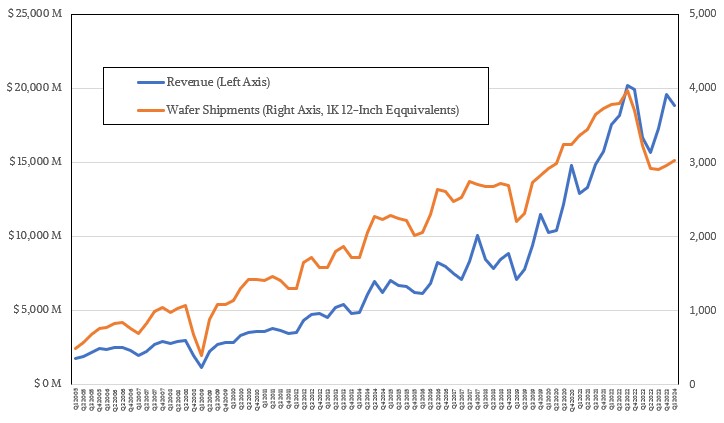

For the quarter ended March, TSMC posted revenue of $18.87 billion, down 3.8% from the previous quarter but up 12.9% from a year ago. Net income decreased 4.1% sequentially but increased 5.4% year over year to $7.17 billion.
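As a rough cross-check, the percentage changes can be inverted to back out the comparison quarters, and the net margin falls out of the two headline figures. This is a sketch using only the numbers quoted above; the helper `prior_value` is a hypothetical name, and the results carry the rounding error of the quoted percentages.

```python
# Hedged sketch: back out the comparison quarters from the quoted changes.
# prior_value is a hypothetical helper; inputs are the figures quoted above.

def prior_value(current: float, pct_change: float) -> float:
    """Return the earlier value given the current one and the fractional change."""
    return current / (1.0 + pct_change)

revenue_q1_2024 = 18.87     # $ billions
net_income_q1_2024 = 7.17   # $ billions

revenue_q4_2023 = prior_value(revenue_q1_2024, -0.038)  # down 3.8% sequentially
revenue_q1_2023 = prior_value(revenue_q1_2024, 0.129)   # up 12.9% year over year
net_margin = net_income_q1_2024 / revenue_q1_2024

print(f"Implied Q4 2023 revenue: ${revenue_q4_2023:.2f} billion")
print(f"Implied Q1 2023 revenue: ${revenue_q1_2023:.2f} billion")
print(f"Q1 2024 net margin: {net_margin:.1%}")
```

The implied figures come to roughly $19.6 billion for the prior quarter and $16.7 billion for the year-ago quarter, with net income at about 38% of revenue.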

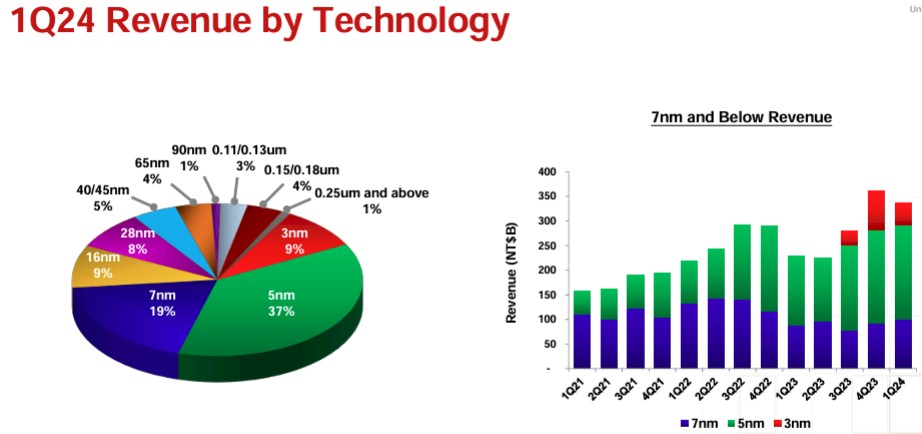

TSMC ended the quarter with just over $60 billion in the bank. Although the company is investing in future technologies at 2 nanometers and beyond, it has enough capacity to serve customers' needs for chips made in 7-nanometer, 5-nanometer, and 4-nanometer technologies that it was able to cut capital expenditures by 42.1% to $5.77 billion. We assume that 3 nanometers is still ramping, and given the interest in 2 nanometers, we think some customers will jump from N4 to N2 processes and others from N5 to N3 processes.

As for the N2 process, Wei said production will begin in the last quarter of 2025, with significant revenues expected from the end of the first quarter to the beginning of the second quarter of 2026. That seems like a long way off, but it really isn't. Wei also acknowledged that it has taken time for N3 to reach the same yields and margins as the previous N7 and N5 generations. Apart from N3 being more complex, TSMC also set the price for N3 years before the inflation boom, and despite raising the price since then, it has had to absorb some of the higher cost of providing it to customers. For example, electricity prices in Taiwan rose 17% last year and 25% this year.

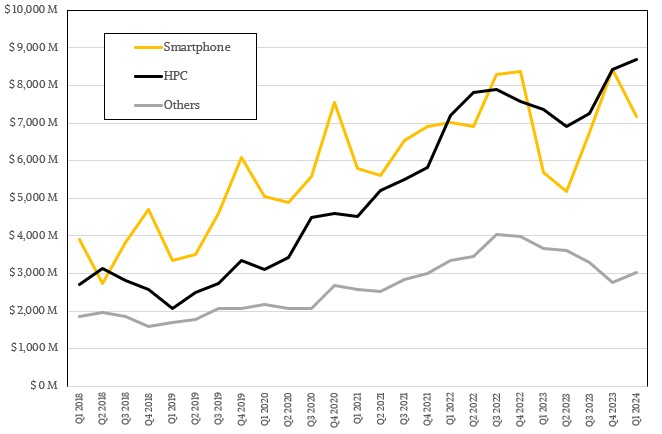

Another great thing about TSMC's Q1 2024 numbers concerns the HPC segment, which doesn't just mean HPC simulation and modeling plus AI training and inference, but rather advanced manufacturing for CPUs, GPUs, and other high-performance compute engines. It was the largest segment in four of the past five quarters. And in the remaining quarter, HPC was tied with smartphone chip manufacturing.

Revenue in TSMC's HPC segment was $8.68 billion, an increase of 18% year-on-year. Smartphone chip sales rose 26.2% to $7.17 billion, but were down 16% from the fourth quarter of 2023, when smartphone and HPC sales were tied at $8.44 billion each. The IoT, automotive, and digital consumer electronics businesses have their own problems and don't add up to much, but from what we know, they're very profitable.

Even better news is that wafer shipments, measured in terms of 12-inch (300 mm) wafers, increased 2.5% quarter-over-quarter and remained stable at around 3 million wafers per quarter. That's still a far cry from the 3.82 million wafers TSMC averaged in 2022 and the peak of 3.97 million wafers it recorded in Q3 2022, before the wheels came off its PC and smartphone businesses. Just a few quarters later, the general purpose server market (i.e. not GPU-powered AI servers) went into recession.

So the last few years have been tough. As TSMC's rosy sales and profit forecasts suggest, things will likely improve in the future.

But the only way to accurately predict the future is to live it. And there is a human kind of Heisenberg effect that makes it hard to understand what's going on while you're actually living it. You can know your position or your momentum, but not both with equal precision.