A “pocket penguin” that lets you know you are loved and cherished. This is not a children's TV show; it's a mental health chatbot.

AI-enabled chatbots, which are programs designed to simulate conversations with human users, are becoming increasingly common in the behavioral health market as a way to help address the shortage of mental health clinicians.

Mental health chatbots can deliver cognitive behavioral therapy (CBT) and other therapy lessons through artificial intelligence (AI)-powered direct messages, as well as provide behavioral health triage for healthcare providers.

These chatbots, which benefit both healthcare professionals and patients, will be part of the behavioral health industry for the foreseeable future, industry insiders told Behavioral Health Business. The “Pandora’s Box” of mental health chatbots has been opened.

Wysa, a mental health and wellness platform, features a penguin avatar on its app interface. The chatbot asks how you're feeling, listens to your open-ended responses, and offers lessons in guided meditation, breathing exercises, and cognitive behavioral therapy (CBT). It also tells jokes on command.

Based in Bangalore, Karnataka, India, Wysa has served more than 5 million people in 95 countries. The penguin has held 500 million conversations with users.

In 2022, Wysa raised $20 million in a Series B funding round, bringing the total amount raised to just under $30 million.

Wysa works with organizations such as Harvard Medical School, L'Oréal, Aetna, and Bosch to provide services to their employees. It also works with the UK's National Health Service (NHS) as a triage tool. Individuals can also download Wysa directly from their smartphone's app store and choose to use the chatbot for free or pay for access to a human coach.

Chatbots are designed to be the first layer of mental health prevention, said Chaitali Sinha, Wysa's senior vice president of healthcare and clinical development.

“The people we primarily serve are those who normally don't have any support at all,” Sinha said.

According to research published in JMIR mHealth and uHealth, frequent users of the Wysa app had a significantly higher average improvement in their self-reported depression scores than infrequent users.

Wysa is one of the mental health chatbots that has secured significant funding and touts compelling data.

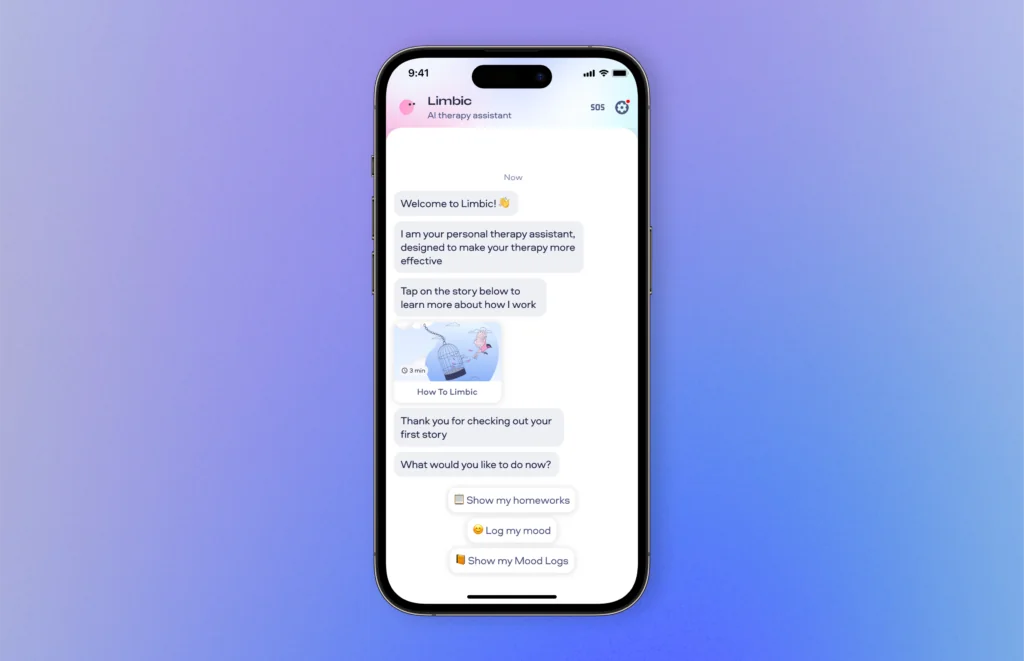

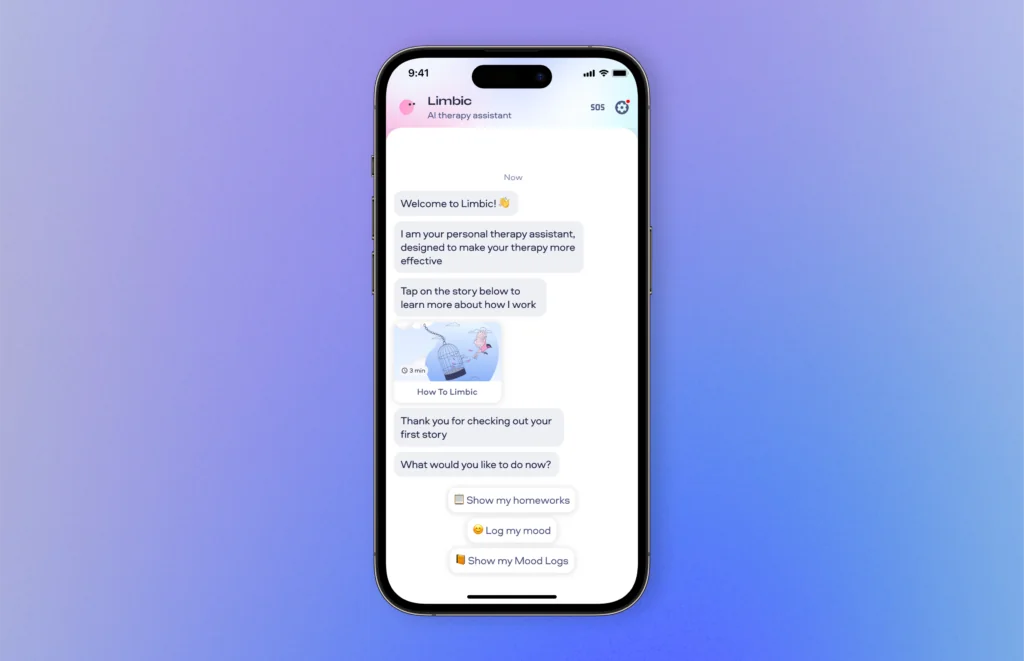

Based in London, Limbic AI is trusted by the NHS and has helped more than 250,000 NHS patients access behavioral health care. The company plans to expand into the United States in 2024.

Limbic Access is the company's web chatbot, which can sit on a healthcare provider's website or be embedded in a native app. According to Limbic founder and CEO Ross Harper, the bot handles intake and triage and performs initial mental health screening in a “very compelling way.”

“This is not a web form deployment,” Harper said. “This will yield new results, have a significant and demonstrable impact on clinical outcomes, and reduce the cost of care. This provides excellent clinical evaluation that can lead to improvements in clinical outcomes.”

The product improved engagement and clinical recovery and reduced churn and misdiagnosis.

Benefits of a “pocket ally”

Chatbots offer significant benefits to patients and the behavioral health industry.

Chatbots are available 24/7, so users can have human-like interactions at any time.

Chatbots can also increase access to behavioral health for marginalized populations.

A new study published in Nature Medicine found that using the Limbic Access AI chatbot led to a 179% increase in nonbinary patients accessing mental health support and a 29% increase in sign-ups from ethnic minority groups.

“The fact that it's AI makes it easier for patients to verbalize sensitive thoughts and feelings,” Harper said. “Patients are willing to open up to non-judgmental AI.”

Because chatbots are race- and gender-neutral, members of marginalized groups are more likely to open up to them, which sidesteps the problem of patients not feeling represented by their clinicians. The Limbic chatbot also works proactively, making clear to patients that they can benefit from the support options available to them.

Other chatbots have seen similar results.

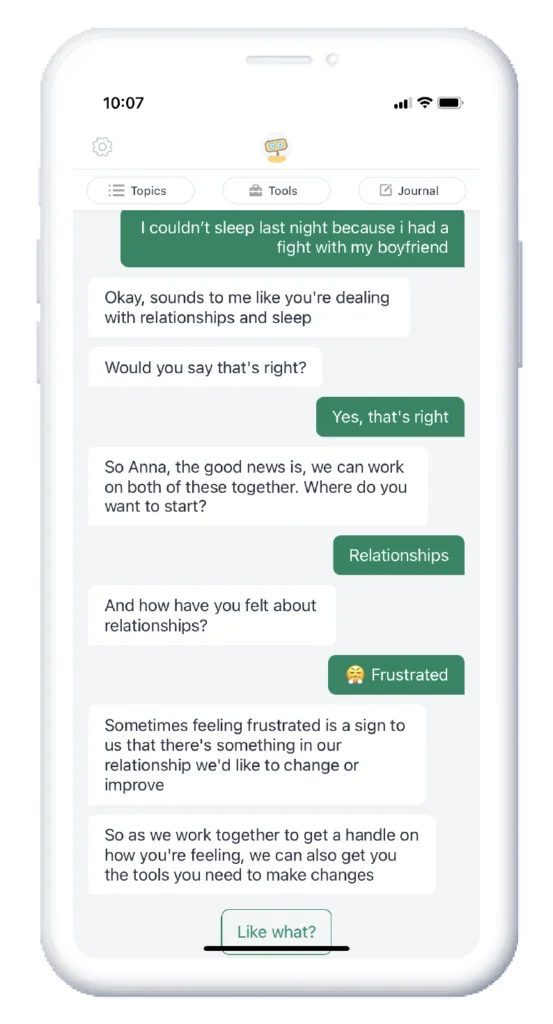

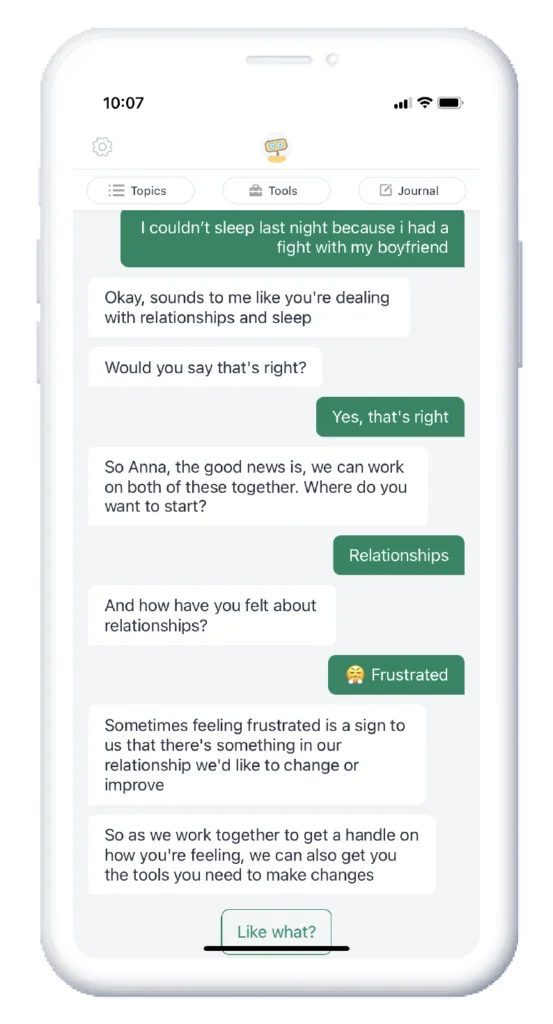

“The truth is, some of the most difficult conversations can be made easier with bots,” said Brad Gescheider, Chief Commercial Officer at Woebot.

Woebot Health, based in San Francisco, California, partners with employers, giving their employees access to its chatbot (which features a friendly-looking robot), and with health systems, integrating the chatbot into existing clinical workflows. Woebot raised $9.5 million in 2022, bringing its total funding to $123.3 million.

One study found that the Woebot users who experienced the greatest reductions in depressive symptoms and stress were more likely to be non-Hispanic Black, to self-identify as male, to be highly educated, and to be young and uninsured.

Beyond helping marginalized populations, chatbots can also ease problems stemming from the mental health workforce crisis.

“The biggest existential risk right now is doing nothing, because the vast majority of patients will not have access to treatment,” Gescheider said.

Sinha said that simply training more therapists is not a viable option because the gap between demand and supply is so large. People who receive care through the NHS, for example, face long waiting times.

“There is value in creating a model that is more like a stepped care model,” Sinha said. “We need an effective way to triage people so they can be seen at the right time. And if technology can get us there or help us do that, that's a powerful tool put to good use.”

Chatbot risks

Digital healthcare tools often come with privacy risks, a concern that can keep users up at night.

Wysa addresses these concerns through anonymity. To protect privacy, Wysa keeps most users anonymous: when users download the Wysa app from the Apple App Store, they are not prompted to provide identifying information.

There is also a risk that apps will miss signs of clinical risk that users communicate.

Most apps provide emergency resources, such as a list of hotlines. However, not all bots can detect whether a person is in crisis, according to a study published in JMIR mHealth and uHealth.

The study looked at 10 mental health chatbot apps and analyzed over 6,000 reviews of the apps.

“None of the chatbots have intelligent algorithmic models to detect emergencies,” the study authors said. “It is up to the user to let the chatbot know that they are in crisis.”

The research shows that some chatbots can detect a crisis through keywords such as “suicide.” However, these features are still at an early stage of development, and people who just want to talk about their feelings are sometimes referred to crisis hotlines “due to lack of intelligent understanding.”

Wysa takes a unique approach to crisis intervention, allowing users to create a safety plan that includes emergency contacts, locations where users feel safe, and people they can contact.

“It's very dangerous not to have clinical oversight of all the responses that chatbots give to users,” Gescheider said. “It's clear that there are bad actors in this space who are taking advantage of technology without that level of oversight.”

“If you're not HIPAA compliant, if you're not going through additional design controls, if you're not adhering to how to properly build medical-grade software, I think you're putting your patients at risk,” he continued.

Another risk associated with chatbots has to do with how useful the technology is perceived to be.

Chatbot companies are actively working on making their bots friendly and human-like. Woebot aims to establish “lasting collaborative relationships with users, similar to the bonds formed between humans.”

“Woebot does a great job of disarming users with humor and asking the right questions,” Gescheider said. “The truth is, some of the most difficult conversations are made easier with bots.”

But sometimes the connection with a pocket companion can go too far. The JMIR mHealth and uHealth study found that some users develop an unhealthy attachment to chatbots, preferring them to their own support systems.

“This app has treated me more like a human than any family member ever has,” read one of the reviews included in the study.

This level of attachment can be unhealthy, said study lead author Romael Haque, a doctoral candidate and graduate researcher at Marquette University.

“This human interaction feels good, but it needs to be carefully designed,” he said.

The study recommends that bots prompt users to seek human, non-technological mental health support to reduce over-attachment.

The future of chatbots

Chatbots could be widely deployed both as triage tools and as pocket companions.

“We have the potential to do this on a large public health scale,” Sinha said. “It's something that can easily cross countries. … It could be especially helpful in countries where there's only one doctor or psychologist in the entire country. Those are things I definitely dream of and hope for.”

Haque would like to see the tools better regulated before they are widely adopted.

Current FDA regulations are not strict enough, he said, and the tools themselves need further proof of concept.

“They say it's evidence-based, but it's not really evidence-based,” Haque said. “They're just offering evidence-based treatments. For example, meditation is an evidence-based treatment, so they tell you to meditate for 15 minutes [and therefore] package themselves as if they were evidence-based.”

Haque said that of the 10 chatbots studied, Wysa and Woebot are exceptions to this rule, as both have undergone extensive clinical trials.

Overall, Haque said he would recommend a chatbot to a friend unless the friend has significant mental health needs.

“You’re dealing with people who have mental health issues,” he said. “So they're really vulnerable.”